Is Spark Down?

Are you experiencing issues with your Spark application? Has it suddenly stopped working, leaving you confused and frustrated? Before you panic, it is worth working through the usual reasons a Spark application goes down. This guide covers the potential causes, the troubleshooting steps, and the preventive measures that help keep your Spark application running smoothly.

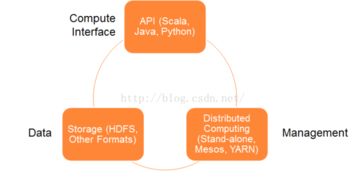

Understanding Spark’s Architecture

Apache Spark is a distributed computing engine that provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. A running Spark application is made up of a driver process, a set of executor processes, and the SparkContext, which connects the driver to a cluster manager (Spark standalone, YARN, or Kubernetes). Understanding how these pieces interact is crucial for finding the root cause when a Spark application goes down.

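| Component | Description |
|---|---|
| Spark Driver | The process that runs the application’s main() function and creates the SparkContext. Depending on the deploy mode, it runs on the client machine or inside the cluster, not necessarily on the master node. It turns the application into tasks, schedules them on executors, and tracks their progress. |
| Spark Executor | A process launched on a worker node for a single application. It runs the tasks assigned by the driver and keeps cached data in memory or on disk, within the memory and CPU cores it was allocated. |
| SparkContext | The main entry point to Spark’s core functionality, living inside the driver. It connects to the cluster manager, which acquires executors for the application. Since Spark 2.0 it is usually accessed through SparkSession. |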
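To make the division of labor concrete, here is a toy model in plain Python, not Spark code. A hypothetical run_job function plays the driver: it splits the input into partitions and schedules one task per partition, while a thread pool stands in for the executors. It also imitates Spark’s fault tolerance by re-submitting a failed task until it has failed MAX_FAILURES times, a hypothetical constant that mirrors the role of spark.task.maxFailures (default 4). Real Spark schedules tasks across separate processes and machines, so treat this purely as an illustration of the idea.

```python
# Toy model of Spark's driver/executor split: NOT Spark's real scheduler.
# run_job, partition and MAX_FAILURES are hypothetical names for illustration.
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

MAX_FAILURES = 4  # mirrors the role of spark.task.maxFailures (default 4)


def partition(data, num_partitions):
    """Driver side: split the input into num_partitions roughly equal slices."""
    return [data[i::num_partitions] for i in range(num_partitions)]


def run_job(data, func, num_partitions=4, num_executors=2):
    """Driver: schedule one task per partition on a pool of 'executors',
    retry failed tasks, and combine the partial results."""
    parts = partition(data, num_partitions)
    attempts = [1] * num_partitions
    results = [None] * num_partitions
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        # Map each running task (future) back to its partition index.
        pending = {executors.submit(func, p): i for i, p in enumerate(parts)}
        while pending:
            done, _ = wait(pending, return_when=FIRST_COMPLETED)
            for fut in done:
                i = pending.pop(fut)
                try:
                    results[i] = fut.result()
                except Exception as exc:
                    if attempts[i] >= MAX_FAILURES:
                        # Like Spark, give up on the whole job once one task
                        # has failed MAX_FAILURES times.
                        raise RuntimeError(
                            f"Task {i} failed {attempts[i]} times; aborting job"
                        ) from exc
                    attempts[i] += 1
                    # The driver re-schedules the failed task.
                    pending[executors.submit(func, parts[i])] = i
    return sum(results), attempts
```

For example, run_job(list(range(10)), sum) returns (45, [1, 1, 1, 1]): four partitions, each summed by an "executor" on its first attempt, then combined by the "driver".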

Common Causes of Spark Application Downtime

Several factors can contribute to a Spark application being down. Here are some of the most common causes:

- Network Issues: Connectivity problems between the driver, the cluster manager, and the worker nodes can make the application fail, for example when executors can no longer reach the driver. Causes include network congestion, firewall rules that block Spark’s ports, and hardware failures.

- Resource Allocation: If the application is not given enough memory or CPU, the driver or executors can run out of memory and crash, or tasks can stall. This can happen when the cluster is under heavy load or when the application is not tuned for the resources it has.

- Spark Configuration: Incorrectly set Spark parameters can cause application failures. Typical culprits are memory settings (such as spark.executor.memory and spark.driver.memory), storage settings, and other critical parameters.

- Task Failures: A single failed task does not normally bring the application down, because Spark retries it. If the same task fails spark.task.maxFailures times (4 by default), Spark aborts the job, which often ends the application. Repeated failures typically come from corrupt input data, hardware faults, or bugs in the application code.

- Spark UI Issues: The driver serves the Spark UI (on port 4040 by default), so an unreachable UI can indicate that the driver has exited. It can also point to a misconfiguration, such as a blocked port, or the UI having bound to a different port (4041, 4042, and so on) because 4040 was already taken.
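Resource and configuration problems often come down to simple arithmetic. On YARN and Kubernetes, each executor container needs its heap (spark.executor.memory, default 1g) plus a memory overhead, which defaults to 10% of the heap with a 384 MiB minimum unless spark.executor.memoryOverhead is set. The sketch below, with hypothetical helper names, parses spark-defaults.conf-style text (a key and a value separated by whitespace, with # for comments) and estimates how many executors fit on one worker node. It ignores off-heap memory, PySpark worker memory, and cluster-manager limits, and it assumes spark.executor.cores defaults to 1 as on YARN, so treat its numbers as a first approximation.

```python
import re

# Size suffixes this sketch accepts, in MiB; a bare number is read as MiB,
# matching how Spark reads spark.executor.memory and spark.executor.memoryOverhead.
_MIB_PER_UNIT = {None: 1, "m": 1, "g": 1024, "t": 1024 * 1024}


def parse_conf(text):
    """Parse spark-defaults.conf-style text into a dict of strings."""
    conf = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, *rest = line.split(None, 1)
        conf[key] = rest[0].strip() if rest else ""
    return conf


def to_mib(size):
    """Convert a memory string such as '4g' or '512m' to MiB."""
    match = re.fullmatch(r"(\d+)(?:([mgt])b?)?", size.strip().lower())
    if not match:
        raise ValueError(f"unsupported memory size: {size!r}")
    return int(match.group(1)) * _MIB_PER_UNIT[match.group(2)]


def executor_container_mib(conf, overhead_factor=0.10, min_overhead_mib=384):
    """Executor heap plus memory overhead, as requested from YARN/Kubernetes."""
    heap = to_mib(conf.get("spark.executor.memory", "1g"))
    if "spark.executor.memoryOverhead" in conf:
        overhead = to_mib(conf["spark.executor.memoryOverhead"])
    else:
        overhead = max(int(heap * overhead_factor), min_overhead_mib)
    return heap + overhead


def executors_per_node(conf, node_mem_mib, node_cores):
    """How many executors fit on one worker, limited by memory and by cores."""
    by_memory = node_mem_mib // executor_container_mib(conf)
    by_cores = node_cores // int(conf.get("spark.executor.cores", "1"))
    return min(by_memory, by_cores)
```

With spark.executor.memory set to 4g and spark.executor.cores to 2, the overhead is max(409, 384) = 409 MiB, so each container needs 4505 MiB. A node with 16384 MiB and 8 cores then fits min(16384 // 4505, 8 // 2) = 3 executors: memory, not CPU, is the limit.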

Troubleshooting Steps

When your Spark application is down, follow these troubleshooting steps to identify and resolve the issue:

- Check Network Connectivity: Make sure the driver, the cluster manager (for example the standalone master), and the worker nodes can reach each other, and verify that firewall rules allow traffic on the ports Spark uses.

- Monitor Resource Usage: Use tools like Ganglia, Prometheus, or Spark’s web UI to monitor your application’s resource usage, and check whether the driver or the executors are running out of memory or CPU.

- Review Spark Configuration: Verify that the configuration parameters are set correctly, whether in spark-defaults.conf or in the options passed to spark-submit, and look for misconfigurations that could be causing the failure.

- Check Task Failures: Inspect the logs of failed tasks, which you can reach from the stage pages of the Spark UI or from the executor logs, to identify why they failed and pinpoint the root cause.

- Review Spark UI: If the Spark UI is not accessible, check the driver logs. The UI is a web server embedded in the driver, so its logs show whether the driver is still running and which port the UI bound to. If the application has already finished, its UI is only available through the Spark History Server, and only if event logging was enabled.
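For the connectivity and UI checks, a plain TCP probe run from the machine that is having trouble is often the quickest first test. The sketch below uses only Python’s socket module. The port table is an assumption based on Spark’s defaults for a standalone cluster (7077 for the master, 8080 for the master web UI, 4040 for an application’s UI), so adjust it to your deployment. Because Spark moves the application UI to 4041, 4042 and so on when 4040 is taken (up to spark.port.maxRetries retries, 16 by default), the hypothetical find_app_ui_ports helper scans that range.

```python
import socket

# Spark's default ports for a standalone cluster (assumed; adjust as needed).
DEFAULT_PORTS = {
    "master RPC (spark://)": 7077,
    "master web UI": 8080,
    "application UI": 4040,
}


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, DNS failure, ...
        return False


def probe(host, ports=DEFAULT_PORTS, timeout=2.0):
    """Check each named port on host and report which ones accept connections."""
    return {name: is_port_open(host, port, timeout) for name, port in ports.items()}


def find_app_ui_ports(host, first=4040, max_retries=16, timeout=0.5):
    """List the ports in Spark's UI retry range that accept connections."""
    return [p for p in range(first, first + max_retries + 1)
            if is_port_open(host, p, timeout)]
```

If the master ports answer but none of the UI ports do, the problem is more likely the driver than the cluster itself.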
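To check resource usage and executor health programmatically, Spark’s monitoring REST API, served by the driver under /api/v1 (for example http://<driver-host>:4040/api/v1/applications), returns JSON. The sketch below fetches /applications/<app-id>/allexecutors, which lists dead executors as well as active ones, and flags executors that are inactive, have failed tasks, or are close to their storage memory limit. It reads the isActive, failedTasks, memoryUsed and maxMemory fields; memoryUsed and maxMemory describe storage memory for cached blocks, not the whole JVM heap, and field names may vary between Spark versions, so check them against your version’s monitoring documentation. The function names and the 90% threshold are illustrative choices.

```python
import json
from urllib.request import urlopen


def get_json(url, timeout=5):
    """GET a URL and decode the JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def fetch_all_executors(ui_base, timeout=5):
    """Return {app_id: [executor summaries]} for the apps served by this UI."""
    base = ui_base.rstrip("/") + "/api/v1/applications"
    return {
        app["id"]: get_json(f"{base}/{app['id']}/allexecutors", timeout)
        for app in get_json(base, timeout)
    }


def flag_executors(executors, storage_threshold=0.9):
    """Return (executor id, [reasons]) for executors that look unhealthy."""
    problems = []
    for ex in executors:
        reasons = []
        if not ex.get("isActive", True):
            reasons.append("inactive")
        if ex.get("failedTasks", 0) > 0:
            reasons.append(f"{ex['failedTasks']} failed tasks")
        max_mem = ex.get("maxMemory", 0)
        if max_mem and ex.get("memoryUsed", 0) / max_mem >= storage_threshold:
            reasons.append("storage memory nearly full")
        if reasons:
            problems.append((ex.get("id"), reasons))
    return problems
```

A typical call loops over fetch_all_executors("http://localhost:4040").items() and passes each executor list to flag_executors; separating the fetch from the check keeps the check easy to test on saved JSON.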
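When you inspect the logs of failed tasks, a quick scan for well-known error messages can narrow things down before you read stack traces in detail. The sketch below counts lines that match a few messages commonly seen in Spark driver and executor logs, such as java.lang.OutOfMemoryError, ExecutorLostFailure, FetchFailedException, "Job aborted due to stage failure", and YARN’s "Container killed by YARN for exceeding memory limits". The pattern list is a hypothetical starting point, not an exhaustive catalog, and the exact wording can differ between Spark versions and cluster managers.

```python
import re
from collections import Counter

# Illustrative failure signatures; extend them for your cluster and Spark version.
SIGNATURES = {
    "out_of_memory": re.compile(r"java\.lang\.OutOfMemoryError"),
    "executor_lost": re.compile(r"ExecutorLostFailure"),
    "shuffle_fetch_failed": re.compile(r"FetchFailedException"),
    "yarn_memory_kill": re.compile(r"Container killed by YARN for exceeding memory limits"),
    "job_aborted": re.compile(r"Job aborted due to stage failure"),
}


def scan_lines(lines):
    """Count how many lines match each known failure signature."""
    counts = Counter()
    for line in lines:
        for name, pattern in SIGNATURES.items():
            if pattern.search(line):
                counts[name] += 1
    return counts


def scan_log_file(path):
    """Scan a log file, tolerating bytes that are not valid UTF-8."""
    with open(path, encoding="utf-8", errors="replace") as f:
        return scan_lines(f)
```

A high out_of_memory or yarn_memory_kill count points back at resource allocation, while executor_lost and shuffle_fetch_failed often accompany node or network trouble.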

Preventive Measures

Preventing Spark application downtime requires proactive measures. Here are some tips to help you avoid future issues:

- Optimize Resource Allocation: Ensure that your Spark application is allocated enough resources to run efficiently. Monitor resource usage and adjust the allocation as needed.

- Use Reliable Network Infrastructure: Invest in a reliable network infrastructure to minimize