Spark Create: A Comprehensive Guide to Spark’s Creation and Capabilities

Apache Spark is an open-source engine for processing large-scale data across a cluster of machines. This article covers how Spark came about, how it is structured, what its main features are, where it is used, and how to get started with it. By the end, you’ll have a working picture of what Spark is and where it fits in your data processing.

Origins of Spark

Apache Spark began in 2009 as a research project led by Matei Zaharia at UC Berkeley’s AMPLab. It was open-sourced in 2010, donated to the Apache Software Foundation in 2013, and became a top-level Apache project in 2014. It was designed as a general-purpose distributed computing engine that could handle both batch and interactive data processing tasks. Spark quickly gained popularity due to its ease of use, high performance, and ability to work with various data sources.

Architecture of Spark

Spark’s architecture is designed to be scalable and efficient. It consists of several key components:

| Component | Description |
|---|---|
| Spark Core | The underlying execution engine, responsible for task scheduling, memory management, fault recovery, and the RDD API. Spark has no storage layer of its own and reads from external systems such as HDFS or Amazon S3. |
| Spark SQL | Spark’s module for structured data processing, offering SQL queries and the DataFrame API. |
| Spark Streaming | Spark’s module for near-real-time processing of data streams, which it handles as a series of small batches. |
| MLlib | Spark’s machine learning library, providing various machine learning algorithms and utilities. |
| GraphX | Spark’s graph processing library, for analyzing graph-structured data such as social networks. |

Features of Spark

Spark offers several features that make it a powerful tool for data processing:

- Speed: Spark can be much faster than Hadoop MapReduce, especially for iterative algorithms and interactive queries, because it can keep intermediate data in memory instead of writing it to disk between steps.

- Scalability: Spark partitions data across the nodes of a cluster and processes the partitions in parallel, so it can handle larger datasets as machines are added.

- Flexibility: Spark supports various data sources, including HDFS, Cassandra, HBase, and Amazon S3.

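- Resilience: Spark provides fault tolerance through its resilient distributed dataset (RDD) abstraction. Each RDD records the lineage of transformations used to build it, so partitions lost to a node failure can be recomputed.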

- Interactive Querying: Spark SQL allows for interactive querying of data, making it easier to explore and analyze data.

- Machine Learning: MLlib provides a wide range of machine learning algorithms and utilities, enabling users to build and deploy machine learning models.
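
To make the speed and resilience points above more concrete, here is a toy model in plain Python. It is only a sketch and does not use Spark at all: `ToyRDD`, its methods, and `read_source` are hypothetical names invented for this illustration, and real RDDs are partitioned across machines, which this model ignores. It shows three ideas: transformations are lazy and only record lineage, a cached result avoids re-reading the source, and lost cached data can be rebuilt by replaying the lineage.

```python
from typing import Any, Callable, List, Optional


class ToyRDD:
    """Toy single-machine model of an RDD; hypothetical, not Spark's API."""

    def __init__(self, compute: Callable[[], List[Any]],
                 parent: Optional["ToyRDD"] = None, name: str = "source") -> None:
        self._compute = compute      # recipe that rebuilds this dataset
        self.parent = parent         # previous step in the lineage
        self.name = name
        self._keep = False           # set by cache()
        self._cached: Optional[List[Any]] = None

    def map(self, fn: Callable[[Any], Any]) -> "ToyRDD":
        # Lazy: nothing is computed here, only a new lineage step is recorded.
        return ToyRDD(lambda: [fn(x) for x in self.collect()], self, "map")

    def filter(self, pred: Callable[[Any], bool]) -> "ToyRDD":
        return ToyRDD(lambda: [x for x in self.collect() if pred(x)], self, "filter")

    def cache(self) -> "ToyRDD":
        # Ask for the result to be kept in memory once it has been computed.
        self._keep = True
        return self

    def collect(self) -> List[Any]:
        # An action: this is the point where the recorded steps actually run.
        if self._cached is None:
            result = self._compute()
            if not self._keep:
                return result
            self._cached = result
        return list(self._cached)

    def lose_cached_data(self) -> None:
        # Simulate a failure that wipes the in-memory copy.
        self._cached = None

    def lineage(self) -> List[str]:
        steps, node = [], self
        while node is not None:
            steps.append(node.name)
            node = node.parent
        return steps[::-1]


reads = {"count": 0}


def read_source() -> List[int]:
    reads["count"] += 1              # count how often the "disk" is read
    return list(range(10))


squares = ToyRDD(read_source).filter(lambda x: x % 2 == 0).map(lambda x: x * x).cache()
print(squares.lineage(), reads["count"])   # lineage is known, nothing read yet
print(squares.collect(), reads["count"])   # first action reads the source
print(squares.collect(), reads["count"])   # second action is served from the cache
squares.lose_cached_data()
print(squares.collect(), reads["count"])   # rebuilt by replaying the lineage
```

The read counter stays flat while the cache is intact, which is the intuition behind Spark’s advantage on iterative workloads that reuse the same dataset many times.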

Applications of Spark

Spark has a wide range of applications across various industries:

- Finance: Spark is used for fraud detection, risk management, and algorithmic trading.

- Healthcare: Spark is used for analyzing patient data, identifying disease patterns, and improving patient outcomes.

- Marketing: Spark is used for customer segmentation, personalized recommendations, and targeted advertising.

- Manufacturing: Spark is used for predictive maintenance, supply chain optimization, and quality control.

- Government: Spark is used for analyzing large datasets, such as election data, crime statistics, and environmental data.

Getting Started with Spark

Getting started with Spark is relatively straightforward. Here’s a step-by-step guide:

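- Install Spark: Download a prebuilt release from the Apache Spark website and unpack it. Spark runs on the JVM, so a compatible Java installation is required. For Python-only use, `pip install pyspark` is an alternative.

- Set up a cluster: For development, Spark can run in local mode on a single machine with no cluster at all. For larger workloads, run it under a cluster manager such as Spark’s standalone mode, Hadoop YARN, or Kubernetes, on your own hardware or a cloud platform.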

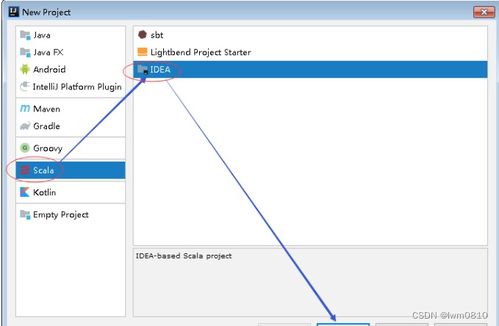

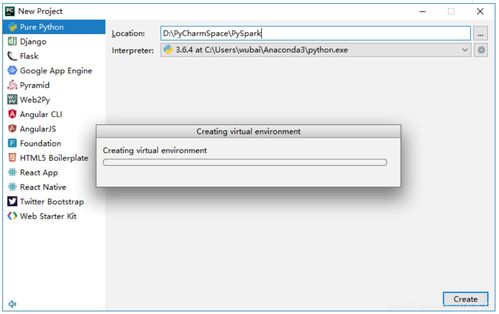

- Write a Spark application: Write a Spark application using Scala, Java, Python, or R.

- Run your application: Submit your application with the `spark-submit` script that ships with Spark, using `--master` to select local mode or a cluster.
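
The last two steps can be sketched as a small PySpark word count. Treat this as a minimal sketch under assumptions rather than an official template: the file name `word_count.py`, the application name `WordCountSketch`, and the `tokenize` helper are hypothetical, and the script assumes PySpark is installed and that the input path points to a text file Spark can read.

```python
import re
import sys
from typing import List


def tokenize(line: str) -> List[str]:
    """Split a line into lowercase words (plain Python, testable without Spark)."""
    return re.findall(r"[a-z0-9']+", line.lower())


def main(input_path: str) -> None:
    # Imported here so tokenize() can be tried on a machine without PySpark.
    from pyspark.sql import SparkSession

    # The master URL is not hard-coded; it is expected to come from spark-submit.
    spark = SparkSession.builder.appName("WordCountSketch").getOrCreate()
    try:
        lines = spark.sparkContext.textFile(input_path)
        counts = (
            lines.flatMap(tokenize)                 # one record per word
            .map(lambda word: (word, 1))            # pair each word with a count of 1
            .reduceByKey(lambda a, b: a + b)        # sum the counts per word
        )
        # Bring only the ten most frequent words back to the driver.
        for word, count in counts.takeOrdered(10, key=lambda pair: -pair[1]):
            print(word, count)
    finally:
        spark.stop()


if __name__ == "__main__":
    if len(sys.argv) == 2:
        main(sys.argv[1])
    else:
        print("usage: spark-submit word_count.py <input-path>")
```

To try it in local mode, one option is `spark-submit --master "local[*]" word_count.py input.txt`, where `input.txt` stands for any text file of your own. Pointing `--master` at a cluster manager instead runs the same script on a cluster.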