Unity AR Foundation Face Tracking: A Comprehensive Guide

Are you intrigued by the possibilities of augmented reality (AR) and looking to dive into the fascinating world of face tracking? Unity AR Foundation Face Tracking is a powerful tool that allows developers to create immersive AR experiences by tracking and analyzing facial expressions. In this detailed guide, we will explore the ins and outs of Unity AR Foundation Face Tracking, helping you understand its features, implementation, and potential applications.

Understanding Unity AR Foundation Face Tracking

Unity AR Foundation Face Tracking is a part of the Unity AR Foundation package, which provides a comprehensive set of tools for building AR applications. This package includes features like plane detection, feature point tracking, and face tracking. In this article, we will focus on the face tracking aspect, which enables developers to track facial expressions and movements in real-time.

Face tracking in AR Foundation is not implemented by a bundled computer vision library. AR Foundation exposes a common, cross-platform API and delegates the actual tracking to platform provider plug-ins: the Apple ARKit XR Plugin on iOS and the Google ARCore XR Plugin on Android. These providers combine camera input with the platform’s own machine learning models to track the face’s pose and a mesh of its surface. This allows developers to create interactive AR experiences that respond to the user’s facial movements and expressions.
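Which face tracking features are available varies by device and platform, so it can help to query them at runtime. The following is a minimal sketch, assuming AR Foundation 4.x or later and an ARFaceManager already present in the scene; the class name FaceTrackingCapabilities is hypothetical and only used for illustration.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;

// Hypothetical helper for illustration; attach it to any GameObject in an AR scene.
public class FaceTrackingCapabilities : MonoBehaviour
{
    // Assumed to be assigned in the Inspector.
    [SerializeField] ARFaceManager faceManager;

    void Start()
    {
        // The descriptor is null when no face subsystem was loaded for this platform.
        var descriptor = faceManager != null ? faceManager.descriptor : null;
        if (descriptor == null)
        {
            Debug.Log("No face tracking provider is available on this device.");
            return;
        }

        // Each flag reports one optional capability of the active provider.
        Debug.Log($"Face pose: {descriptor.supportsFacePose}");
        Debug.Log($"Mesh vertices and indices: {descriptor.supportsFaceMeshVerticesAndIndices}");
        Debug.Log($"Mesh UVs: {descriptor.supportsFaceMeshUVs}");
        Debug.Log($"Mesh normals: {descriptor.supportsFaceMeshNormals}");
        Debug.Log($"Eye tracking: {descriptor.supportsEyeTracking}");
    }
}
```

Checking these flags before relying on a feature lets the app fall back gracefully, for example by hiding eye-driven effects where eye tracking is not reported.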

Setting Up Unity AR Foundation Face Tracking

Before you can start using Unity AR Foundation Face Tracking, you need to set up your Unity project. Here’s a step-by-step guide to help you get started:

- Open Unity Hub and create a new Unity project.

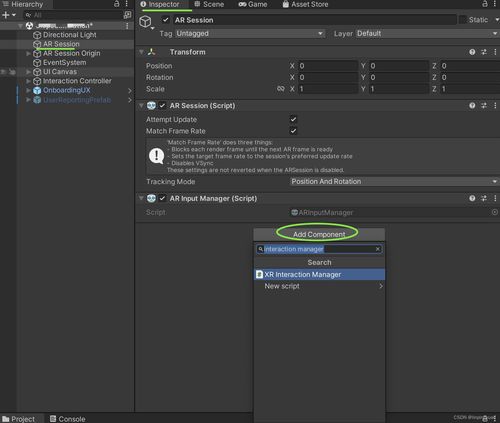

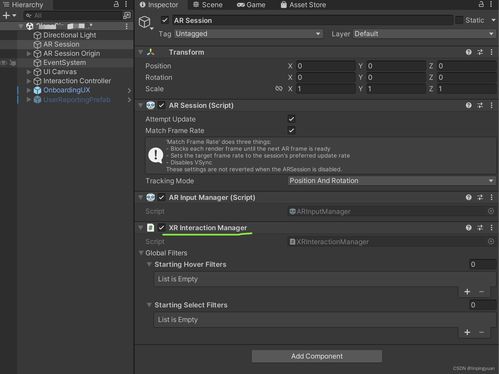

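- Install the AR Foundation package by opening “Window” > “Package Manager” and searching for “AR Foundation”. Also install the provider plug-in for your target platform (Apple ARKit XR Plugin for iOS or Google ARCore XR Plugin for Android) and enable it under “Project Settings” > “XR Plug-in Management”.

- Once the packages are installed, add an AR Session and an XR Origin (named AR Session Origin in older AR Foundation versions) to your scene, then add an ARFaceManager component to the origin object to enable face tracking.

- Make sure your device has a supported front-facing camera. On iOS, ARKit face tracking generally requires a TrueDepth camera or an A12 Bionic or newer chip; on Android, the device must support ARCore.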

With the AR Foundation package and face tracking enabled, you are now ready to start implementing face tracking in your Unity project.
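Before starting a session, it is usually worth confirming that the device supports AR at all. The sketch below follows the availability-check pattern built on ARSession.CheckAvailability; it assumes the ARSession component starts disabled and is assigned in the Inspector, and the class name ARAvailabilityCheck is hypothetical. Note that it checks general AR support, not face tracking specifically.

```csharp
using System.Collections;
using UnityEngine;
using UnityEngine.XR.ARFoundation;

// Hypothetical helper for illustration; enables the session only on supported devices.
public class ARAvailabilityCheck : MonoBehaviour
{
    // Assumed to reference a disabled ARSession component in the scene.
    [SerializeField] ARSession session;

    IEnumerator Start()
    {
        // Ask the platform whether AR is available if that is not yet known.
        if (ARSession.state == ARSessionState.None ||
            ARSession.state == ARSessionState.CheckingAvailability)
        {
            yield return ARSession.CheckAvailability();
        }

        if (ARSession.state == ARSessionState.Unsupported)
        {
            // Fall back to a non-AR experience here.
            Debug.Log("AR is not supported on this device.");
        }
        else
        {
            // Enabling the component starts the AR session.
            session.enabled = true;
        }
    }
}
```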

Implementing Face Tracking in Unity

Implementing face tracking in Unity involves a few key steps. Here’s a brief overview of the process:

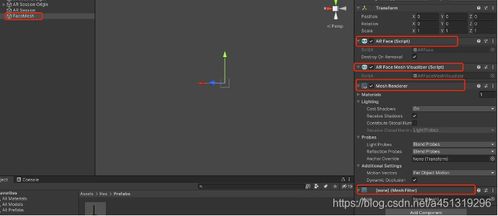

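- Make sure AR Foundation and the provider plug-in for your target platform are imported into your project. On iOS, AR Foundation 4.x also needs the separate ARKit Face Tracking package; later versions fold it into the ARKit XR Plugin.

- Set up the camera to capture the user’s face. The XR Origin already contains an AR Camera with an ARCameraManager component; set its Facing Direction to User so that the front-facing camera is used.

- Initialize the face tracking system by adding an ARFaceManager component to the XR Origin. It is a MonoBehaviour, so it is added in the Editor or with AddComponent rather than created with new. Optionally assign a Face Prefab, which is instantiated for each detected face.

- Subscribe to the ARFaceManager’s facesChanged event to receive updates when faces are added, updated, or removed.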

- Implement the desired behavior based on the face tracking data.

Here’s an example of how you can initialize the face tracking system in your Unity script:

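    using UnityEngine;
    using UnityEngine.XR.ARFoundation;

    public class FaceTrackingExample : MonoBehaviour
    {
        // ARFaceManager is the AR Foundation component that manages tracked faces.
        public ARFaceManager faceManager;

        void Start()
        {
            faceManager = FindObjectOfType<ARFaceManager>();
            // facesChanged is the event exposed in AR Foundation 4.x and 5.x.
            faceManager.facesChanged += OnFacesChanged;
        }

        void OnDestroy()
        {
            // Unsubscribe so the handler is not called on a destroyed object.
            if (faceManager != null)
                faceManager.facesChanged -= OnFacesChanged;
        }

        void OnFacesChanged(ARFacesChangedEventArgs eventArgs)
        {
            // Handle face tracking updates here
        }
    }

Using Face Tracking Data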

Once you have implemented face tracking in your Unity project, you can start using the face tracking data to create interactive AR experiences. Here are some common use cases for face tracking data:

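- Facial Expression Recognition: Detect and respond to the user’s facial expressions, such as smiling, frowning, or raising an eyebrow. AR Foundation’s common API exposes the face pose and mesh; expression coefficients (blend shapes) are provider-specific, for example through the ARKit plug-in on iOS.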

- Facial Feature Tracking: Track the position and orientation of facial features, such as the eyes, nose, and mouth, to create realistic AR characters or avatars.

- Facial Motion Tracking: Track the user’s facial movements and use them to control the behavior of AR objects or characters.
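As an illustration of the feature tracking and motion tracking cases above, the following sketch keeps an object positioned between the user’s eyes. It assumes AR Foundation 4.x or later and that the script sits on the Face Prefab next to the ARFace component; the class name FaceFeatureFollower and the attachment field are hypothetical.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

// Hypothetical component for illustration; place it on the Face Prefab next to ARFace.
[RequireComponent(typeof(ARFace))]
public class FaceFeatureFollower : MonoBehaviour
{
    // Hypothetical object (for example, virtual glasses) to keep between the eyes.
    [SerializeField] Transform attachment;

    ARFace face;

    void Awake() => face = GetComponent<ARFace>();
    void OnEnable() => face.updated += OnFaceUpdated;
    void OnDisable() => face.updated -= OnFaceUpdated;

    void OnFaceUpdated(ARFaceUpdatedEventArgs args)
    {
        // Skip updates while the face is not actively tracked.
        if (attachment == null || face.trackingState != TrackingState.Tracking)
            return;

        // leftEye and rightEye stay null when the provider has no eye data.
        if (face.leftEye != null && face.rightEye != null)
        {
            attachment.position = (face.leftEye.position + face.rightEye.position) * 0.5f;
        }
        else
        {
            // Fall back to the face pose itself.
            attachment.position = face.transform.position;
        }

        attachment.rotation = face.transform.rotation;
    }
}
```

Subscribing to the per-face updated event keeps the logic local to each tracked face, which is one reasonable design when several faces may be tracked at once.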

Here’s an example of how you can access the face tracking data in your Unity script:

    using UnityEngine;
    using UnityEngine.XR.ARFoundation;

    public class FaceTrackingExample : MonoBehaviour
    {
        public ARFaceManager faceManager;

        void Start()
        {
            faceManager = FindObjectOfType<ARFaceManager>();
            faceManager.facesChanged += OnFacesChanged;
        }

        void OnFacesChanged(ARFacesChangedEventArgs eventArgs)
        {
            // eventArgs.updated lists the faces whose data changed.
            foreach (ARFace face in eventArgs.updated)
            {
                if (face != null)
                {
                    // Access